Introduction

Managing streaming data from a source system, like PostgreSQL, MongoDB or DynamoDB, into a downstream system for real-time search and analytics is a challenge for many teams. The flow of data often involves complex ETL tooling as well as self-managed integrations to ensure that high-volume writes, including updates and deletes, don't rack up CPU or impact the performance of the end application.

For a system like Elasticsearch, engineers need in-depth knowledge of the underlying architecture in order to efficiently ingest streaming data. Elasticsearch was designed for log analytics, where data is not frequently changing, which poses additional challenges when dealing with transactional data.

Rockset, on the other hand, is a cloud-native database, removing much of the tooling and overhead required to get data into the system. As Rockset is purpose-built for real-time search and analytics, it has also been designed for field-level mutability, decreasing the CPU required to process inserts, updates and deletes.

In this blog, we'll compare and contrast how Elasticsearch and Rockset handle data ingestion, as well as provide practical techniques for using these systems for real-time analytics.

Elasticsearch

Data Ingestion in Elasticsearch

While there are many ways to ingest data into Elasticsearch, we cover three common methods for real-time search and analytics:

- Ingest data from a relational database into Elasticsearch using the Logstash JDBC input plugin

- Ingest data from Kafka into Elasticsearch using the Kafka Elasticsearch Service Sink Connector

- Ingest data directly from the application into Elasticsearch using the REST API and client libraries

Ingest data from a relational database into Elasticsearch using the Logstash JDBC input plugin

The Logstash JDBC input plugin can be used to offload data from a relational database like PostgreSQL or MySQL to Elasticsearch for search and analytics.

Logstash is an event processing pipeline that ingests and transforms data before sending it to Elasticsearch. Logstash offers a JDBC input plugin that periodically polls a relational database, like PostgreSQL or MySQL, for inserts and updates. To use this service, your relational database needs to provide timestamped records that Logstash can read to determine which changes have occurred.

This ingestion approach works well for inserts and updates, but deletions need additional consideration. That's because Logstash has no way to determine what has been deleted in your OLTP database: a deleted row simply stops appearing in query results. Users can get around this limitation by implementing soft deletes, where a flag is applied to the deleted record and used to filter out data at query time. Alternatively, they can periodically scan their relational database to get the freshest records and reindex the data in Elasticsearch.
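The polling pattern and its blind spot for hard deletes can be simulated in Python with an in-memory SQLite table. This is a sketch of the technique, not Logstash itself: the variable `last_value` plays the role of the value the JDBC input plugin exposes to its SQL statement as `:sql_last_value`, and the table `products`, its `updated_at` and `is_deleted` columns, and the dict standing in for the Elasticsearch index are all hypothetical.

```python
import sqlite3

# Hypothetical source table with a timestamp column and a soft-delete flag.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, "
    "updated_at INTEGER, is_deleted INTEGER DEFAULT 0)"
)

search_index = {}  # stands in for the Elasticsearch index
last_value = 0     # plays the role of Logstash's :sql_last_value


def poll_once():
    """Fetch rows changed since the last poll and apply them to the index."""
    global last_value
    rows = conn.execute(
        "SELECT id, name, updated_at, is_deleted FROM products "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_value,),
    ).fetchall()
    for row_id, name, updated_at, is_deleted in rows:
        # Soft-deleted rows are still indexed; queries filter on the flag.
        search_index[row_id] = {"name": name, "is_deleted": bool(is_deleted)}
        last_value = max(last_value, updated_at)
    return len(rows)


conn.execute("INSERT INTO products VALUES (1, 'lamp', 100, 0)")
conn.execute("INSERT INTO products VALUES (2, 'desk', 101, 0)")
poll_once()

# A soft delete is just an update: flip the flag and bump the timestamp.
conn.execute("UPDATE products SET is_deleted = 1, updated_at = 102 WHERE id = 2")
# A hard delete is invisible to the poller because no row is left to select.
conn.execute("DELETE FROM products WHERE id = 1")
poll_once()

# The hard-deleted 'lamp' row lingers in the index; the soft-deleted 'desk' is filtered.
visible = [doc["name"] for doc in search_index.values() if not doc["is_deleted"]]
print(visible)  # ['lamp']
```

The stale `lamp` entry is exactly the case the paragraph warns about: only a soft delete, or a periodic full rescan, lets the index learn that the row is gone.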

Ingest data from Kafka into Elasticsearch using the Kafka Elasticsearch Service Sink Connector

It's also common to use an event streaming platform like Kafka to send data from source systems into Elasticsearch for real-time search and analytics.

Confluent and Elastic partnered on the release of the Kafka Elasticsearch Service Sink Connector, available to companies using both the managed Confluent Kafka and Elastic Elasticsearch offerings. The connector does require installing and managing additional tooling: Kafka Connect.

Using the connector, you can map each topic in Kafka to a single index type in Elasticsearch. If dynamic typing is used as the index type, then Elasticsearch does support some schema changes, such as adding fields, removing fields and changing types.

One challenge that does arise when using Kafka is the need to reindex the data in Elasticsearch whenever you want to modify the analyzer, tokenizer or indexed fields. This is because the mapping cannot be changed once it has been defined. To reindex the data, you need to double write to the original index and the new index, move the data from the original index to the new index, and then stop the original connector job.
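The three migration steps can be simulated in plain Python to show why the double write matters: records that arrive while the backfill runs must land in both indexes, or the new index ends up stale. The `Router` class and the two dictionaries are hypothetical stand-ins for a connector job and real Elasticsearch indexes.

```python
class Router:
    """Sends each incoming record to every index in `targets` (hypothetical)."""

    def __init__(self, targets):
        self.targets = targets

    def write(self, doc_id, doc):
        for index in self.targets:
            index[doc_id] = doc


old_index = {"1": {"title": "red shoe"}, "2": {"title": "blue hat"}}
new_index = {}  # e.g. created with a new analyzer or mapping
router = Router([old_index])

# Step 1: double write so records arriving mid-migration reach both indexes.
router.targets = [old_index, new_index]
router.write("3", {"title": "green scarf"})
router.write("1", {"title": "red shoe (sale)"})

# Step 2: backfill older documents; setdefault keeps any newer double-written copy.
for doc_id, doc in old_index.items():
    new_index.setdefault(doc_id, doc)

# Step 3: stop writing to the original index once the new one has caught up.
router.targets = [new_index]
router.write("4", {"title": "black belt"})

print(sorted(new_index))        # ['1', '2', '3', '4']
print(new_index["1"]["title"])  # red shoe (sale)
```

Skipping step 1 would leave document `3` and the updated document `1` missing or stale in the new index whenever the backfill snapshot was taken before they arrived.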

If you don't use the managed services from Confluent or Elastic, you can use the open-source Kafka plugin for Logstash to send data to Elasticsearch.

Ingest data directly from the application into Elasticsearch using the REST API and client libraries

Elasticsearch supports client libraries for Java, JavaScript, Ruby, Go, Python and more, which ingest data via the REST API directly from your application. One of the challenges in using a client library is that it needs to be configured to work with queueing and back-pressure for cases when Elasticsearch is unable to handle the ingest load. Without a queueing system in place, data destined for Elasticsearch can be lost.
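A minimal sketch of application-side queueing with back-pressure, using only Python's standard library. A bounded `queue.Queue` makes producers block when the consumer falls behind instead of dropping documents, and a consumer thread drains the queue in batches. `send_batch` is a hypothetical placeholder for a bulk call made through an Elasticsearch client library; here it only records each batch.

```python
import queue
import threading

BATCH_SIZE = 100
buffer = queue.Queue(maxsize=500)  # bounded: put() blocks when full
sent_batches = []
_STOP = object()                   # sentinel telling the consumer to exit


def send_batch(docs):
    """Hypothetical stand-in for a bulk request made via a client library."""
    sent_batches.append(list(docs))


def consumer():
    batch = []
    while True:
        item = buffer.get()
        if item is _STOP:
            break
        batch.append(item)
        if len(batch) >= BATCH_SIZE:
            send_batch(batch)
            batch.clear()
    if batch:  # flush the final partial batch
        send_batch(batch)


worker = threading.Thread(target=consumer)
worker.start()

for i in range(1050):
    # Blocks (back-pressure) rather than losing data if the buffer is full.
    buffer.put({"id": i, "value": i * 2})
buffer.put(_STOP)
worker.join()

print(len(sent_batches), sum(len(b) for b in sent_batches))  # 11 1050
```

A real deployment would also need retries for failed requests and a durable queue if the application itself can crash; an in-process buffer like this one only protects against temporary slowdowns.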

Updates, Inserts and Deletes in Elasticsearch

Elasticsearch has an Update API that can be used to process updates and deletes. The Update API reduces the number of network round trips and the potential for version conflicts. It retrieves the existing document from the index, applies the change and then indexes the data again. That said, Elasticsearch does not offer in-place updates or deletes, so the entire document still has to be reindexed, which is a CPU-intensive operation.

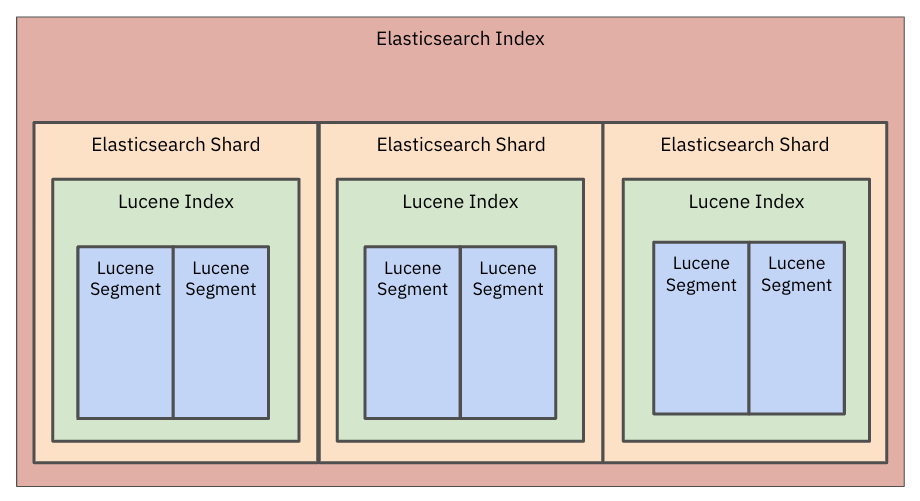

Under the hood, Elasticsearch data is stored in a Lucene index, and that index is broken down into smaller segments. Each segment is immutable, so documents cannot be changed in place. When an update is made, the old document is marked for deletion and a new document is written to form a new segment. To make the updated document searchable, all of the analyzers need to run again, which also increases CPU usage. It's common for customers with constantly changing data to see index merges eat up a considerable amount of their overall Elasticsearch compute bill.

Image 1: Elasticsearch data is stored in a Lucene index, and that index is broken down into smaller segments.
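The update path described above can be modeled with a toy segment store: segments are never modified, so an update tombstones the old copy and writes a fully re-analyzed copy of the document into a new segment. This is a deliberately simplified illustration, not Lucene's real data structures (for instance, real new documents are buffered before being flushed into a segment), and `analyze` is a hypothetical whitespace tokenizer standing in for Elasticsearch analyzers.

```python
def analyze(doc):
    """Toy analyzer: lowercase every field value and split it into terms."""
    terms = []
    for value in doc.values():
        terms.extend(str(value).lower().split())
    return terms


segments = []    # each segment is an immutable tuple of (doc_id, doc, terms) entries
deleted = set()  # (segment_number, doc_id) pairs marked for deletion
analyzer_runs = 0


def write_segment(doc_id, doc):
    """Analyze the full document and flush it as a new one-document segment."""
    global analyzer_runs
    analyzer_runs += 1
    segments.append(((doc_id, dict(doc), tuple(analyze(doc))),))


def update(doc_id, partial):
    """Read-modify-reindex: fetch the live copy, merge the change, re-analyze all of it."""
    for seg_no, segment in enumerate(segments):
        for stored_id, stored_doc, _terms in segment:
            if stored_id == doc_id and (seg_no, doc_id) not in deleted:
                deleted.add((seg_no, doc_id))  # tombstone, not an in-place edit
                write_segment(doc_id, {**stored_doc, **partial})
                return
    raise KeyError(doc_id)


write_segment("p1", {"title": "Trail running shoe",
                     "description": "Lightweight mesh upper", "price": 89})
update("p1", {"price": 79})  # only one field changed...

new_terms = segments[-1][0][2]
print(len(segments), len(deleted), analyzer_runs, len(new_terms))  # 2 1 2 7
```

Changing only the price still re-analyzes all seven terms of the document and leaves a tombstoned copy behind that a later merge has to clean up.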

Given the amount of resources required, Elastic recommends limiting the number of updates into Elasticsearch. Bol.com, a reference customer of Elasticsearch, used it for site search as part of their e-commerce platform. Bol.com had roughly 700K updates per day to their offerings, including content, pricing and availability changes. They originally wanted a solution that stayed in sync with changes as they occurred. But, given the impact of updates on Elasticsearch system performance, they opted to allow for 15-20 minute delays. Batching documents into Elasticsearch ensured consistent query performance.
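To put the Bol.com numbers in perspective, here is a back-of-the-envelope calculation. It assumes, for simplicity, that the 700K daily updates are spread evenly across the day, which real traffic rarely is.

```python
UPDATES_PER_DAY = 700_000
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def updates_per_window(minutes):
    """Average number of changes that pile up during one batch window."""
    return UPDATES_PER_DAY / SECONDS_PER_DAY * minutes * 60


rate = UPDATES_PER_DAY / SECONDS_PER_DAY
print(f"average rate: {rate:.1f} updates/s")  # average rate: 8.1 updates/s
for minutes in (15, 20):
    print(f"{minutes}-minute window: ~{updates_per_window(minutes):,.0f} updates")
# 15-minute window: ~7,292 updates
# 20-minute window: ~9,722 updates
```

Traffic peaks make individual windows larger than this average, but the arithmetic shows the trade-off: instead of a steady stream of about eight individual updates per second, Elasticsearch receives several thousand changes at a time, which can be sent in batches.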

Deletions and Segment Merge Challenges in Elasticsearch

In Elasticsearch, there can be challenges related to deleting old documents and reclaiming space.

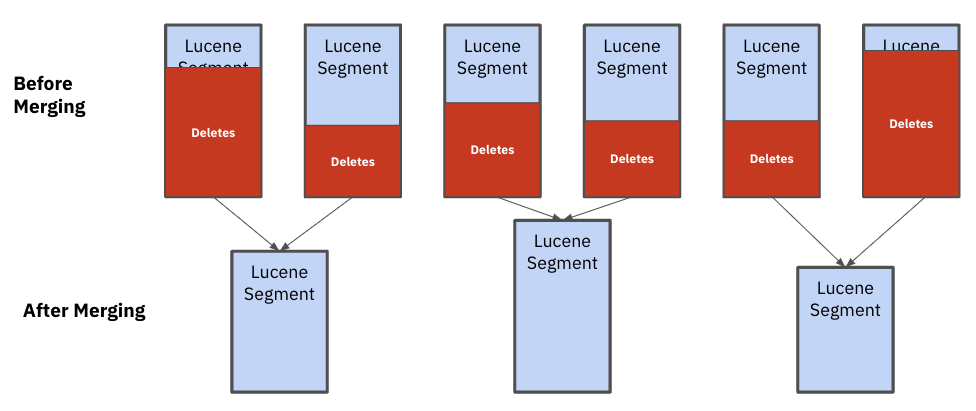

Elasticsearch performs a segment merge in the background when there are many segments in an index or when a segment contains many documents marked for deletion. In a segment merge, the live documents are copied from existing segments into a newly formed segment and the old segments are deleted. Unfortunately, Lucene is not good at sizing the segments that need to be merged, potentially creating uneven segments that impact performance and stability.

Image 2: After merging, you can see that the Lucene segments are all different sizes. These uneven segments impact performance and stability.

That's because Elasticsearch assumes all documents are uniformly sized and makes merge decisions based on the number of documents deleted. When dealing with heterogeneous document sizes, as is often the case in multi-tenant applications, some segments will grow faster than others, slowing down performance for the largest customers on the application. In these cases, the only remedy is to reindex a large amount of data.
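The effect can be illustrated with a toy merge policy that, as the paragraph describes, selects segments by the fraction of documents marked deleted and ignores how many bytes those documents occupy. This is a simplified model for illustration only; Lucene's actual merge policy weighs more factors. The threshold, document counts and per-document sizes are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    name: str
    docs: int
    deleted: int
    bytes_per_doc: int  # documents differ in size across tenants

    @property
    def deleted_ratio(self) -> float:
        return self.deleted / self.docs

    @property
    def wasted_mb(self) -> float:
        # Space held by deleted documents until the segment is merged away.
        return self.deleted * self.bytes_per_doc / 1_000_000


def pick_for_merge(segments, max_deleted_ratio=0.3):
    """Count-based selection: looks only at deleted-document ratios, not bytes."""
    return [s for s in segments if s.deleted_ratio > max_deleted_ratio]


segments = [
    Segment("small-docs tenant", docs=10_000, deleted=4_000, bytes_per_doc=1_000),
    Segment("large-docs tenant", docs=10_000, deleted=2_500, bytes_per_doc=200_000),
]
chosen = pick_for_merge(segments)
for s in segments:
    action = "merge" if s in chosen else "skip"
    print(f"{s.name}: {s.deleted_ratio:.0%} deleted, {s.wasted_mb:.0f} MB wasted -> {action}")
# small-docs tenant: 40% deleted, 4 MB wasted -> merge
# large-docs tenant: 25% deleted, 500 MB wasted -> skip
```

Because the policy counts documents rather than bytes, it reclaims 4 MB from the small-document tenant while 500 MB of dead data stays in the large-document tenant's segment.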

Replica Challenges in Elasticsearch

Elasticsearch uses a primary-backup model for replication. The primary replica processes an incoming write operation and then forwards the operation to its replicas. Each replica receives this operation and re-indexes the data locally. This means that every replica independently spends costly compute resources to re-index the same document over and over again. If there are n replicas, Elastic spends n times the CPU to index the same document. This exacerbates the amount of data that needs to be reindexed when an update or insert occurs.

Bulk API and Queue Challenges in Elasticsearch

While you can use the Update API in Elasticsearch, it's generally recommended to batch frequent changes using the Bulk API. When using the Bulk API, engineering teams often need to create and manage a queue to streamline updates into the system.

A queue is independent of Elasticsearch and needs to be configured and managed separately. The queue consolidates the inserts, updates and deletes to the system within a specific time interval, say 15 minutes, to limit the impact on Elasticsearch. The queuing system also applies a throttle when the rate of insertion is high to ensure application stability. While queues are helpful for updates, they are not good at determining when there are so many data changes that a full reindex of the data is required. This can happen at any time if there are a lot of updates to the system. It's common for teams running Elastic at scale to have dedicated operations members managing and tuning their queues on a daily basis.
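A minimal sketch of the consolidation step: changes collected during one window are coalesced by document ID, so only the last change per document survives, and the result is serialized in the newline-delimited format the Elasticsearch Bulk API accepts (an action line, followed by a source line for `index` actions; `delete` actions have no source line). The index name `products` and the change tuples are hypothetical, and actually sending the body to `POST /_bulk` is left out.

```python
import json


def coalesce(changes):
    """Keep only the latest change per document ID within the window."""
    latest = {}
    for op, doc_id, doc in changes:  # changes arrive in order
        latest[doc_id] = (op, doc)
    return latest


def to_bulk_body(latest, index="products"):
    lines = []
    for doc_id, (op, doc) in latest.items():
        if op == "delete":
            lines.append(json.dumps({"delete": {"_index": index, "_id": doc_id}}))
        else:  # inserts and updates both become full-document index actions
            lines.append(json.dumps({"index": {"_index": index, "_id": doc_id}}))
            lines.append(json.dumps(doc))
    return "\n".join(lines) + "\n"  # the Bulk API requires a trailing newline


window = [
    ("upsert", "1", {"name": "lamp", "price": 30}),
    ("upsert", "2", {"name": "desk", "price": 200}),
    ("upsert", "1", {"name": "lamp", "price": 25}),  # supersedes the first change
    ("delete", "2", None),
]
body = to_bulk_body(coalesce(window))
print(body)
```

Four changes collapse into two bulk actions: one index action for document `1` with the latest price and one delete for document `2`. Coalescing reduces load, but, as the paragraph notes, it cannot tell when the volume of changes has grown large enough that a full reindex would be the better choice.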

Reindexing in Elasticsearch

As mentioned in the previous section, when there is a slew of updates or you need to change the index mappings, a reindex of the data occurs. Reindexing is error-prone and has the potential to take down a cluster. Even more frightening, reindexing can happen at any time.

If you do want to change your mappings, you have more control over when reindexing occurs. Elasticsearch has a Reindex API to copy documents into a new index and an Aliases API to ensure that there is no downtime while the new index is being built. With the Aliases API, queries are sent to the alias, which points to the old index while the new index is being built. When the new index is ready, the alias is switched so that queries read data from the new index.

Even with the Aliases API, it's still challenging to keep the new index in sync with the latest data. That's because an alias routes writes to only one index. So, you need to configure the data pipeline upstream to double write into both the new and the old index.
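The request bodies for this workflow are plain JSON. The sketch below only builds them with Python's standard library and leaves out sending them with an HTTP client or an official Elasticsearch client. It shows a `_reindex` body that copies documents from the old index into the new one, and a single `_aliases` request that removes the alias from the old index and adds it to the new one; Elasticsearch applies the actions in one `_aliases` call atomically, so queries switch over without a gap. The index and alias names are hypothetical.

```python
import json

ALIAS = "products"                 # what applications query
OLD, NEW = "products_v1", "products_v2"

# POST /_reindex: copy existing documents into the new index (new mappings).
reindex_body = {"source": {"index": OLD}, "dest": {"index": NEW}}

# POST /_aliases: both actions are applied atomically in one request.
alias_swap_body = {
    "actions": [
        {"remove": {"index": OLD, "alias": ALIAS}},
        {"add": {"index": NEW, "alias": ALIAS}},
    ]
}

print(json.dumps(reindex_body))
print(json.dumps(alias_swap_body, indent=2))
```

The alias swap only changes where reads go. Documents written to the old index after the reindex started still have to reach the new index, which is why the upstream double write remains necessary until the swap is done.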

Rockset

Data Ingestion in Rockset

Rockset uses built-in connectors to keep your data in sync with source systems. Rockset's managed connectors are tuned for each type of data source so that data can be ingested and made queryable within 2 seconds. This avoids manual pipelines that add latency or can only ingest data in micro-batches, say every 15 minutes.

At a high level, Rockset offers built-in connectors to OLTP databases, data streams, and data lakes and warehouses. Here's how they work:

Built-In Connectors to OLTP Databases

Rockset performs an initial scan of the tables in your OLTP database and then uses CDC streams to stay in sync with the latest data, making data available for querying within 2 seconds of when it was generated by the source system.
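The initial-scan-then-CDC pattern can be sketched generically. The event shape is a simplified, hypothetical one loosely modeled on Debezium-style change events (an `op` of `c`, `u` or `d` for create, update and delete), and the dict stands in for the target collection. This illustrates the general technique, not Rockset's implementation.

```python
def initial_scan(table_rows):
    """Bulk-load a snapshot of the source table, keyed by primary key."""
    return {row["id"]: dict(row) for row in table_rows}


def apply_change(collection, event):
    """Apply one change event; deletes arrive explicitly, unlike timestamp polling."""
    op, key = event["op"], event["key"]
    if op in ("c", "u"):
        collection[key] = dict(event["after"])
    elif op == "d":
        collection.pop(key, None)
    else:
        raise ValueError(f"unknown op: {op}")


snapshot = [{"id": 1, "status": "new"}, {"id": 2, "status": "new"}]
collection = initial_scan(snapshot)

stream = [
    {"op": "u", "key": 1, "after": {"id": 1, "status": "shipped"}},
    {"op": "c", "key": 3, "after": {"id": 3, "status": "new"}},
    {"op": "d", "key": 2},
]
for event in stream:
    apply_change(collection, event)

print(collection)
# {1: {'id': 1, 'status': 'shipped'}, 3: {'id': 3, 'status': 'new'}}
```

Because the change stream carries explicit delete events, row `2` disappears from the target without soft-delete flags or periodic rescans.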

Built-In Connectors to Data Streams

With data streams like Kafka or Kinesis, Rockset continuously ingests new data from topics using a pull-based integration that requires no tuning in Kafka or Kinesis.

Built-In Connectors to Data Lakes and Warehouses

Rockset constantly monitors for updates and ingests any new objects from data lakes like S3 buckets. We generally find that teams want to join real-time streams with data from their data lakes for real-time analytics.

Updates, Inserts and Deletes in Rockset

Rockset has a distributed architecture optimized to efficiently index data in parallel across multiple machines.

Rockset is a document-sharded database, so it writes entire documents to a single machine rather than splitting them apart and sending different fields to different machines. Because of this, it's quick to add new documents for inserts or to locate existing documents by primary key (_id) for updates and deletes.
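Document sharding can be sketched as routing each whole document to a shard chosen from its primary key. The hash function (CRC32 from `zlib`) and the shard count are assumptions for illustration, since Rockset's actual routing scheme is not described here. The point is that an update or delete needs only the `_id` to find the single shard holding the full document.

```python
import zlib

NUM_SHARDS = 4
shards = [dict() for _ in range(NUM_SHARDS)]


def shard_for(doc_id: str) -> int:
    # Stable hash of the primary key (Python's built-in hash() is salted per process).
    return zlib.crc32(doc_id.encode("utf-8")) % NUM_SHARDS


def upsert(doc):
    shards[shard_for(doc["_id"])][doc["_id"]] = doc  # whole document, one shard


def delete(doc_id):
    shards[shard_for(doc_id)].pop(doc_id, None)      # one lookup by _id


for i in range(8):
    upsert({"_id": f"user-{i}", "name": f"name-{i}"})
delete("user-3")

print(sum(len(s) for s in shards))  # 7
```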

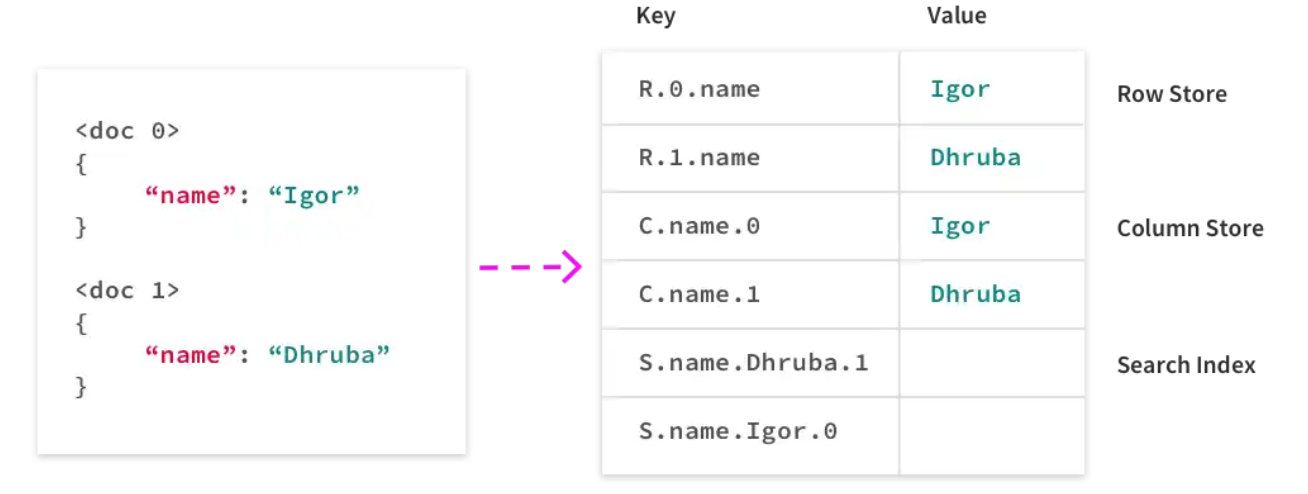

Similar to Elasticsearch, Rockset uses indexes to quickly and efficiently retrieve data when it is queried. Unlike other databases or search engines, though, Rockset indexes data at ingest time in a Converged Index, an index that combines a column store, a search index and a row store. The Converged Index stores all of the values in the fields as a series of key-value pairs. Image 3 shows a document and how it is stored in Rockset.

Image 3: Rockset's Converged Index stores all of the values in the fields as a series of key-value pairs in a search index, column store and row store.

Under the hood, Rockset uses RocksDB, a high-performance key-value store that makes mutations trivial. RocksDB supports atomic writes and deletes across different keys. If an update comes in for the name field of a document, exactly 3 keys need to be updated, one per index. Indexes for other fields in the document are unaffected, meaning Rockset can efficiently process updates instead of wasting cycles updating indexes for entire documents every time.
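The key-value layout can be sketched with a plain dict standing in for RocksDB. The key encodings below (`R` for row store, `C` for column store, `S` for search index) are hypothetical and far simpler than Rockset's real format. What the sketch demonstrates is the claim above: changing one field rewrites exactly one entry in each of the three indexes (in the search index, that means replacing the old term's key with the new one), while keys for the document's other fields stay untouched.

```python
store = {}  # stands in for RocksDB: one flat key-value space


def entries(doc_id, field, value):
    """One entry per index for a single field value (hypothetical key encodings)."""
    return {
        "row": (("R", doc_id, field), value),           # row store: doc -> its fields
        "column": (("C", field, doc_id), value),        # column store: field -> values
        "search": (("S", field, value, doc_id), None),  # search index: term -> doc ids
    }


def field_of(key):
    return key[2] if key[0] == "R" else key[1]


def ingest(doc):
    for field, value in doc.items():
        if field != "_id":
            for key, val in entries(doc["_id"], field, value).values():
                store[key] = val


def update_field(doc_id, field, new_value):
    """Update one field in all three indexes; return the keys that were written."""
    old = entries(doc_id, field, store[("R", doc_id, field)])
    new = entries(doc_id, field, new_value)
    written = []
    for index_name in ("row", "column", "search"):
        old_key, _ = old[index_name]
        new_key, new_val = new[index_name]
        if old_key != new_key:  # the search key embeds the value, so swap it
            del store[old_key]
        store[new_key] = new_val
        written.append(new_key)
    return written


ingest({"_id": 1, "name": "Igor", "city": "Rome", "age": 30})
other_before = {k: v for k, v in store.items() if field_of(k) != "name"}
written = update_field(1, "name", "Ivan")
other_after = {k: v for k, v in store.items() if field_of(k) != "name"}

print(len(written), other_before == other_after)  # 3 True
```

In a real key-value store these three writes (plus the deletion of the old search key) would go into one atomic batch, which is the RocksDB property the paragraph relies on.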

Nested documents and arrays are also first-class data types in Rockset, meaning the same update process applies to them as well. This makes Rockset well suited for updates on data stored in modern formats like JSON and Avro.

The team at Rockset has also built a number of custom extensions for RocksDB to handle high write rates and heavy reads, a common pattern in real-time analytics workloads. One of those extensions is remote compaction, which introduces a clean separation of query compute and indexing compute in RocksDB Cloud. This prevents writes from interfering with reads. Thanks to these enhancements, Rockset can scale its writes according to customers' needs and make fresh data available for querying even as mutations occur in the background.

Updates, Inserts and Deletes Using the Rockset API

Rockset users can use the default _id field or specify a particular field to be the primary key. This field enables a document, or part of a document, to be overwritten. The difference between Rockset and Elasticsearch is that Rockset can update the value of an individual field without requiring the entire document to be reindexed.

To update existing documents in a collection using the Rockset API, you can make requests to the Patch Documents endpoint. For each existing document you wish to update, you specify the _id field and a list of patch operations to apply to the document.
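What a list of patch operations does to a stored document can be sketched locally. The sketch assumes the operations follow JSON Patch (RFC 6902) semantics, with `op`, `path` and `value` members; check the Rockset API reference for the exact request shape and supported operations. The helper `apply_patch` is hypothetical: it supports only `add`, `replace` and `remove` on object members and makes no network calls.

```python
import copy


def _split(path):
    # JSON Pointer: "/a/b" -> ["a", "b"], unescaping ~1 -> "/" and then ~0 -> "~"
    return [p.replace("~1", "/").replace("~0", "~") for p in path.split("/")[1:]]


def apply_patch(doc, operations):
    """Apply add/replace/remove ops (object members only) to a copy of doc."""
    result = copy.deepcopy(doc)
    for op in operations:
        *parents, last = _split(op["path"])
        target = result
        for part in parents:
            target = target[part]
        if op["op"] == "add":
            target[last] = op["value"]
        elif op["op"] == "replace":
            if last not in target:
                raise KeyError(f"cannot replace missing member {op['path']}")
            target[last] = op["value"]
        elif op["op"] == "remove":
            del target[last]
        else:
            raise ValueError(f"unsupported op {op['op']}")
    return result


doc = {"_id": "u42", "name": "Ada", "address": {"city": "London", "zip": "N1"}, "tmp": True}
patch = [
    {"op": "replace", "path": "/address/city", "value": "Cambridge"},
    {"op": "add", "path": "/tags", "value": ["vip"]},
    {"op": "remove", "path": "/tmp"},
]
print(apply_patch(doc, patch))
# {'_id': 'u42', 'name': 'Ada', 'address': {'city': 'Cambridge', 'zip': 'N1'}, 'tags': ['vip']}
```

Each operation names only the field it touches, including a nested one like `/address/city`, which is what lets a field-level store update those keys without rewriting the rest of the document.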

The Rockset API also exposes an Add Documents endpoint so that you can insert data directly into your collections from your application code. To delete existing documents, specify the _id fields of the documents you wish to remove and make a request to the Delete Documents endpoint of the Rockset API.

Handling Replicas in Rockset

Unlike in Elasticsearch, only one replica in Rockset does the indexing and compaction, using RocksDB remote compactions. This reduces the amount of CPU required for indexing, especially when multiple replicas are used for durability.
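The difference between the two replication models can be put in rough numbers. In the sketch below, `index_cost` and `copy_cost` are hypothetical, unitless costs chosen only to show the scaling. When every copy re-indexes (the Elasticsearch model described earlier), cost grows as copies × index_cost. When one copy indexes and the rest receive the result (as described for Rockset), each extra copy adds only the transfer cost. Real costs depend on document size, analyzers and hardware.

```python
def indexing_cpu(copies, index_cost, copy_cost, index_once):
    """Total CPU units to make one document queryable on `copies` copies.

    index_once=False: every copy re-runs indexing (document replication).
    index_once=True: one copy indexes, the others only receive the result.
    """
    if index_once:
        return index_cost + (copies - 1) * copy_cost
    return copies * index_cost


# Hypothetical units: indexing a document costs 10, shipping built data costs 1.
for copies in (1, 2, 3):
    print(copies,
          indexing_cpu(copies, 10, 1, index_once=False),
          indexing_cpu(copies, 10, 1, index_once=True))
# 1 10 10
# 2 20 11
# 3 30 12
```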

Reindexing in Rockset

At ingest time in Rockset, you can use an ingest transformation to specify the data transformations to apply to your raw source data. If you wish to change the ingest transformation at a later date, you will need to reindex your data.

That said, Rockset enables schemaless ingest and dynamically types the values of every field of data. If the size and shape of the data or queries change, Rockset continues to be performant and does not require data to be reindexed.

Rockset can scale to hundreds of terabytes of data without ever needing to be reindexed. This goes back to Rockset's sharding strategy. When the compute that a customer allocates in their Virtual Instance increases, a subset of shards is shuffled to achieve a better distribution across the cluster, allowing for more parallelized, faster indexing and query execution. As a result, reindexing does not need to occur in these scenarios.
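Why scaling does not force a reindex can be sketched as follows: documents are hashed to a fixed number of shards once, and scaling only changes which node hosts each shard. The shard count, node names and rebalancing rule (repeatedly move a shard from the most-loaded node to the least-loaded one until loads differ by at most one) are assumptions for illustration, not Rockset's actual algorithm.

```python
from collections import Counter

NUM_SHARDS = 16  # fixed when the collection is created (assumption)


def rebalance(assignment, nodes):
    """Move whole shards, never documents, until node loads differ by at most 1."""
    assignment = dict(assignment)
    moved = []
    while True:
        load = Counter(assignment.values())  # nodes with no shards count as 0
        busiest = max(nodes, key=lambda n: load[n])
        idlest = min(nodes, key=lambda n: load[n])
        if load[busiest] - load[idlest] <= 1:
            return assignment, moved
        shard = min(s for s, n in assignment.items() if n == busiest)
        assignment[shard] = idlest
        moved.append(shard)


# Two nodes before scaling: even shards on node-a, odd shards on node-b.
before = {s: "node-a" if s % 2 == 0 else "node-b" for s in range(NUM_SHARDS)}
after, moved = rebalance(before, ["node-a", "node-b", "node-c", "node-d"])

print(len(moved), sorted(Counter(after.values()).values()))  # 8 [4, 4, 4, 4]
```

Doubling the node count moves half of the shards, but every document stays in the shard it was hashed to, so only shard-to-node placement changes and no document needs to be re-indexed.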

Conclusion

Elasticsearch was designed for log analytics, where data is not frequently updated, inserted or deleted. Over time, teams have expanded their use of Elasticsearch, often using it as a secondary data store and indexing engine for real-time analytics on constantly changing transactional data. This can be a costly endeavor, especially for teams optimizing for real-time data ingestion, and it involves a considerable amount of management overhead.

Rockset, on the other hand, was designed for real-time analytics and to make new data available for querying within 2 seconds of when it was generated. To serve this use case, Rockset supports in-place inserts, updates and deletes, saving on compute and limiting the need to reindex documents. Rockset also recognizes the management overhead of connectors and ingestion and takes a platform approach, incorporating real-time connectors into its cloud offering.

Overall, we've seen companies that migrate from Elasticsearch to Rockset for real-time analytics save 44% on their compute bill alone. Join the wave of engineering teams switching from Elasticsearch to Rockset in days. Start your free trial today.
