[ad_1]

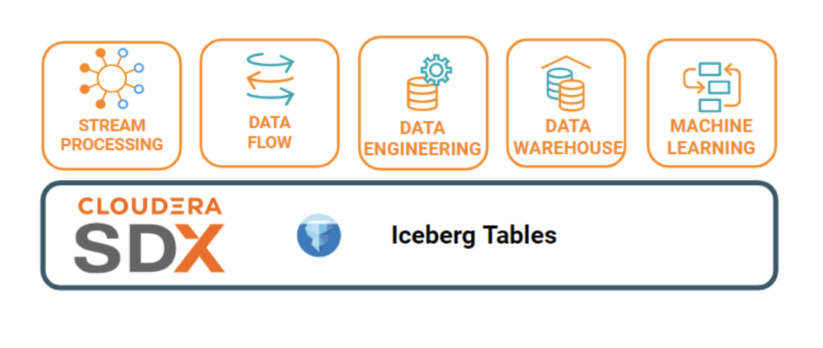

Since we introduced the final availability of Apache Iceberg in Cloudera Knowledge Platform (CDP), we’re excited to see prospects testing their analytic workloads on Iceberg. We’re additionally receiving a number of requests to share extra particulars on how key information companies in CDP, akin to Cloudera Knowledge Warehousing (CDW), Cloudera Knowledge Engineering (CDE), Cloudera Machine Studying (CML), Cloudera Knowledge Stream (CDF) and Cloudera Stream Processing (CSP) combine with the Apache Iceberg desk format and the simplest technique to get began. On this weblog, we’ll share with you intimately how Cloudera integrates core compute engines together with Apache Hive and Apache Impala in Cloudera Knowledge Warehouse with Iceberg. We’ll publish observe up blogs for different information companies.

Iceberg fundamentals

Iceberg is an open desk format designed for big analytic workloads. As described in Iceberg Introduction it helps schema evolution, hidden partitioning, partition format evolution and time journey. Each desk change creates an Iceberg snapshot, this helps to resolve concurrency points and permits readers to scan a secure desk state each time.

The Apache Iceberg venture additionally develops an implementation of the specification within the type of a Java library. This library is built-in by execution engines akin to Impala, Hive and Spark. The brand new function this weblog put up is aiming to debate about Iceberg V2 format (model 2), because the Iceberg desk specification explains, the V1 format aimed to assist giant analytic information tables, whereas V2 aimed so as to add row stage deletes and updates.

In a bit extra element, Iceberg V1 added assist for creating, updating, deleting and inserting information into tables. The desk metadata is saved subsequent to the information recordsdata below a metadata listing, which permits a number of engines to make use of the identical desk concurrently.

Iceberg V2

With Iceberg V2 it’s potential to do row-level modifications with out rewriting the information recordsdata. The thought is to retailer details about the deleted information in so-called delete recordsdata. We selected to make use of place delete recordsdata which offer the perfect efficiency for queries. These recordsdata retailer the file paths and positions of the deleted information. Throughout queries the question engines scan each the information recordsdata and delete recordsdata belonging to the identical snapshot and merge them collectively (i.e. eliminating the deleted rows from the output).

Updating row values is achievable by doing a DELETE plus an INSERT operation in a single transaction.

Compacting the tables merges the modifications/deletes with the precise information recordsdata to enhance efficiency of reads. To compact the tables use CDE Spark.

By default, Hive and Impala nonetheless create Iceberg V1 tables. To create a V2 desk, customers must set desk property ‘format-version’ to ‘2’. Present Iceberg V1 tables could be upgraded to V2 tables by merely setting desk property ‘format-version’ to ‘2’. Hive and Impala are suitable with each Iceberg format variations, i.e. customers can nonetheless use their outdated V1 tables; V2 tables merely have extra options.

Use instances

Complying with particular elements of laws akin to GDPR (Normal Knowledge Safety Regulation) and CCPA (California Shopper Privateness Act) implies that databases want to have the ability to delete private information upon buyer requests. With delete recordsdata we are able to simply mark the information belonging to particular folks. Then common compaction jobs can bodily erase the deleted information.

One other trivial use case is when present information have to be modified to right improper information or replace outdated values.

How one can Replace and Delete

Presently solely Hive can do row stage modifications. Impala can learn the up to date tables and it will probably additionally INSERT information into Iceberg V2 tables.

To take away all information belonging to a single buyer:

DELETE FROM ice_tbl WHERE user_id = 1234;

To replace a column worth in a particular document:

UPDATE ice_tbl SET col_v = col_v + 1 WHERE id = 4321;

Use the MERGE INTO assertion to replace an Iceberg desk based mostly on a staging desk:

MERGE INTO buyer USING (SELECT * FROM new_customer_stage) sub ON sub.id = buyer.id WHEN MATCHED THEN UPDATE SET identify = sub.identify, state = sub.new_state WHEN NOT MATCHED THEN INSERT VALUES (sub.id, sub.identify, sub.state);

When to not use Iceberg

Iceberg tables function atomic DELETE and UPDATE operations, making them just like conventional RDBMS techniques. Nevertheless, it’s essential to notice that they don’t seem to be appropriate for OLTP workloads as they don’t seem to be designed to deal with excessive frequency transactions. As a substitute, Iceberg is meant for managing giant, sometimes altering datasets.

If one is on the lookout for an answer that may deal with very giant datasets and frequent updates, we advocate utilizing Apache Kudu.

CDW fundamentals

Cloudera Knowledge Warehouse (CDW) Knowledge Service is a Kubernetes-based software for creating extremely performant, unbiased, self-service information warehouses within the cloud that may be scaled dynamically and upgraded independently. CDW helps streamlined software improvement with open requirements, open file and desk codecs, and commonplace APIs. CDW leverages Apache Iceberg, Apache Impala, and Apache Hive to supply broad protection, enabling the best-optimized set of capabilities for every workload.

CDW separates the compute (Digital Warehouses) and metadata (DB catalogs) by operating them in unbiased Kubernetes pods. Compute within the type of Hive LLAP or Impala Digital Warehouses could be provisioned on-demand, auto-scaled based mostly on question load, and de-provisioned when idle thus decreasing cloud prices and offering constant fast outcomes with excessive concurrency, HA, and question isolation. Thus simplifying information exploration, ETL and deriving analytical insights on any enterprise information throughout the Knowledge Lake.

CDW additionally simplifies administration by making multi-tenancy safe and manageable. It permits us to independently improve the Digital Warehouses and Database Catalogs. Via tenant isolation, CDW can course of workloads that don’t intrude with one another, so everybody meets report timelines whereas controlling cloud prices.

How one can use

Within the following sections we’re going to present a number of examples of the best way to create Iceberg V2 tables and the best way to work together with them. We’ll see how one can insert information, change the schema or the partition format, the best way to take away/replace rows, do time-travel and snapshot administration.

Hive:

Making a Iceberg V2 Desk

A Hive Iceberg V2 desk could be created by specifying the format-version as 2 within the desk properties.

Ex.

CREATE EXTERNAL TABLE TBL_ICEBERG_PART(ID INT, NAME STRING) PARTITIONED BY (DEPT STRING) STORED BY ICEBERG STORED AS PARQUET TBLPROPERTIES ('FORMAT-VERSION'='2');

|

- CREATE TABLE AS SELECT (CTAS)

CREATE EXTERNAL TABLE CTAS_ICEBERG_SOURCE STORED BY ICEBERG AS SELECT * FROM TBL_ICEBERG_PART;

|

CREATE EXTERNAL TABLE ICEBERG_CTLT_TARGET LIKE ICEBERG_CTLT_SOURCE STORED BY ICEBERG;

|

Ingesting Knowledge

Knowledge into an Iceberg V2 desk could be inserted equally like regular Hive tables

Ex:

INSERT INTO TABLE TBL_ICEBERG_PART VALUES (1,'ONE','MATH'), (2, 'ONE','PHYSICS'), (3,'ONE','CHEMISTRY'), (4,'TWO','MATH'), (5, 'TWO','PHYSICS'), (6,'TWO','CHEMISTRY');

|

INSERT OVERWRITE TABLE CTLT_ICEBERG_SOURCE SELECT * FROM TBL_ICEBERG_PART;

|

MERGE INTO TBL_ICEBERG_PART USING TBL_ICEBERG_PART_2 ON TBL_ICEBERG_PART.ID = TBL_ICEBERG_PART_2.ID WHEN NOT MATCHED THEN INSERT VALUES (TBL_ICEBERG_PART_2.ID, TBL_ICEBERG_PART_2.NAME, TBL_ICEBERG_PART_2.DEPT); |

Delete & Updates:

V2 tables permit row stage deletes and updates equally just like the Hive-ACID tables.

Ex:

DELETE FROM TBL_ICEBERG_PART WHERE DEPT = 'MATH';

|

UPDATE TBL_ICEBERG_PART SET DEPT='BIOLOGY' WHERE DEPT = 'PHYSICS' OR ID = 6;

|

Querying Iceberg tables:

Hive helps each vectorized and non vectorized reads for Iceberg V2 tables, Vectorization could be enabled usually utilizing the next configs:

- set hive.llap.io.reminiscence.mode=cache;

- set hive.llap.io.enabled=true;

- set hive.vectorized.execution.enabled=true

SELECT COUNT(*) FROM TBL_ICEBERG_PART;

|

Hive permits us to question desk information for particular snapshot variations.

SELECT * FROM TBL_ICEBERG_PART FOR SYSTEM_VERSION AS OF 7521248990126549311;

|

Snapshot Administration

Hive permits a number of operations relating to snapshot administration, like:

ALTER TABLE TBL_ICEBERG_PART EXECUTE EXPIRE_SNAPSHOTS('2021-12-09 05:39:18.689000000');

|

ALTER TABLE TBL_ICEBERG_PART EXECUTE SET_CURRENT_SNAPSHOT (7521248990126549311);

|

ALTER TABLE TBL_ICEBERG_PART EXECUTE ROLLBACK(3088747670581784990); |

Alter Iceberg tables

ALTER TABLE … ADD COLUMNS (...); (Add a column) ALTER TABLE … REPLACE COLUMNS (...);(Drop column by utilizing REPLACE COLUMN to take away the outdated column) ALTER TABLE … CHANGE COLUMN … AFTER …; (Reorder columns) |

ALTER TABLE TBL_ICEBERG_PART SET PARTITION SPEC (NAME);

|

Materialized Views

- Creating Materialized Views:

CREATE MATERIALIZED VIEW MAT_ICEBERG AS SELECT ID, NAME FROM TBL_ICEBERG_PART ;

|

ALTER MATERIALIZED VIEW MAT_ICEBERG REBUILD;

|

- Querying Materialized Views:

SELECT * FROM MAT_ICEBERG;

|

Impala

Apache Impala is an open supply, distributed, massively parallel SQL question engine with its backend executors written in C++, and its frontend (analyzer, planner) written in java. Impala makes use of the Iceberg Java library to get details about Iceberg tables throughout question evaluation and planning. Alternatively, for question execution the excessive performing C++ executors are in cost. This implies queries on Iceberg tables are lightning quick.

Impala helps the next statements on Iceberg tables.

Creating Iceberg tables

CREATE TABLE ice_t(id INT, identify STRING, dept STRING) PARTITIONED BY SPEC (bucket(19, id), dept) STORED BY ICEBERG TBLPROPERTIES ('format-version'='2'); |

- CREATE TABLE AS SELECT (CTAS):

CREATE TABLE ice_ctas PARTITIONED BY SPEC (truncate(1000, id)) STORED BY ICEBERG TBLPROPERTIES ('format-version'='2') AS SELECT id, int_col, string_col FROM source_table; |

- CREATE TABLE LIKE:

(creates an empty desk based mostly on one other desk)

CREATE TABLE new_ice_tbl LIKE orig_ice_tbl;

|

Querying Iceberg tables

Impala helps studying V2 tables with place deletes.

Impala helps every kind of queries on Iceberg tables that it helps for another tables. E.g. joins, aggregations, analytical queries and so on. are all supported.

SELECT * FROM ice_t; SELECT depend(*) FROM ice_t i LEFT OUTER JOIN other_t b ON (i.id = other_t.fid) WHERE i.col = 42; |

It’s potential to question earlier snapshots of a desk (till they’re expired).

SELECT * FROM ice_t FOR SYSTEM_TIME AS OF '2022-01-04 10:00:00'; SELECT * FROM ice_t FOR SYSTEM_TIME AS OF now() - interval 5 days; SELECT * FROM ice_t FOR SYSTEM_VERSION AS OF 123456; |

We are able to use DESCRIBE HISTORY assertion to see what are the sooner snapshots of a desk:

DESCRIBE HISTORY ice_t FROM '2022-01-04 10:00:00'; DESCRIBE HISTORY ice_t FROM now() - interval 5 days; DESCRIBE HISTORY ice_t BETWEEN '2022-01-04 10:00:00' AND '2022-01-05 10:00:00'; |

Insert information into Iceberg tables

INSERT statements work for each V1 and V2 tables.

INSERT INTO ice_t VALUES (1, 2); INSERT INTO ice_t SELECT col_a, col_b FROM other_t; |

INSERT OVERWRITE ice_t VALUES (1, 2); INSERT OVERWRITE ice_t SELECT col_a, col_b FROM other_t; |

Load information into Iceberg tables

LOAD DATA INPATH '/tmp/some_db/parquet_files/' INTO TABLE iceberg_tbl; |

Alter Iceberg tables

ALTER TABLE ... RENAME TO ... (renames the desk) ALTER TABLE ... CHANGE COLUMN ... (change identify and sort of a column) ALTER TABLE ... ADD COLUMNS ... (provides columns to the top of the desk) ALTER TABLE ... DROP COLUMN ... |

ALTER TABLE ice_p SET PARTITION SPEC (VOID(i), VOID(d), TRUNCATE(3, s), HOUR(t), i); |

Snapshot administration

ALTER TABLE ice_tbl EXECUTE expire_snapshots('2022-01-04 10:00:00'); ALTER TABLE ice_tbl EXECUTE expire_snapshots(now() - interval 5 days); |

DELETE and UPDATE statements for Impala are coming in later releases. As talked about above, Impala is utilizing its personal C++ implementation to cope with Iceberg tables. This offers important efficiency benefits in comparison with different engines.

Future Work

Our assist for Iceberg v2 is superior and dependable, and we proceed our push for innovation. We’re quickly growing enhancements, so you’ll be able to anticipate finding new options associated to Iceberg in every CDW launch. Please tell us your suggestions within the feedback part under.

Abstract

Iceberg is an rising, extraordinarily fascinating desk format. It’s below fast improvement with new options coming each month. Cloudera Knowledge Warehouse added assist for the latest format model of Iceberg in its newest launch. Customers can run Hive and Impala digital warehouses and work together with their Iceberg tables through SQL statements. These engines are additionally evolving rapidly and we ship new options and optimizations in each launch. Keep tuned, you’ll be able to anticipate extra weblog posts from us about upcoming options and technical deep dives.

To be taught extra:

- Replay our webinar Unifying Your Knowledge: AI and Analytics on One Lakehouse, the place we talk about the advantages of Iceberg and open information lakehouse.

- Learn why the future of information lakehouses is open.

- Replay our meetup Apache Iceberg: Trying Under the Waterline.

Strive Cloudera Knowledge Warehouse (CDW) by signing up for a 60 day trial, or take a look at drive CDP. If you have an interest in chatting about Apache Iceberg in CDP, let your account workforce know or contact us immediately. As at all times, please present your suggestions within the feedback part under.

[ad_2]