[ad_1]

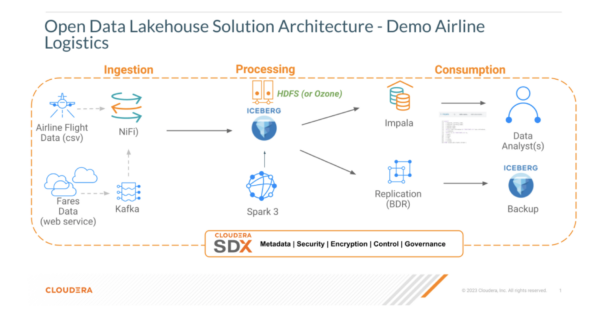

Cloudera lately launched a totally featured Open Information Lakehouse, powered by Apache Iceberg within the non-public cloud, along with what’s already been obtainable for the Open Information Lakehouse within the public cloud since final yr. This launch signified Cloudera’s imaginative and prescient of Iceberg in every single place. Clients can deploy Open Information Lakehouse wherever the info resides—any public cloud, non-public cloud, or hybrid cloud, and port workloads seamlessly throughout deployments.

With Cloudera Open Information Lakehouse within the non-public cloud, you possibly can profit from following key options:

- Multi-engine interoperability and compatibility with Apache Iceberg, together with NiFi, Flink and SQL Stream Builder (SSB), Spark, and Impala.

- Time Journey: Reproduce a question as of a given time or snapshot ID, which can be utilized for historic audits, validating ML fashions, and rollback of inaccurate operations, for instance.

- Desk Rollback: Permit customers to rapidly right issues by resetting tables to a very good state.

- Wealthy set of SQL (question, DDL, DML) instructions: Create or manipulate database objects, run queries, load and modify knowledge, carry out time journey operations, and convert Hive exterior tables to Iceberg tables utilizing SQL instructions.

- In-place desk (schema, partition) evolution: Effortlessly evolve Iceberg desk schema and partition layouts with out rewriting desk knowledge or migrating to a brand new desk, for instance.

- SDX Integration: Gives frequent safety and governance insurance policies, in addition to knowledge lineage and auditing.

- Iceberg Replication: Gives catastrophe restoration and desk backups.

- Straightforward portability of workloads to public cloud and again with none code refactoring.

On this multi-part weblog submit, we’re going to indicate you how you can use the most recent Cloudera Iceberg innovation to construct an Open Information Lakehouse on a personal cloud.

For this primary a part of the weblog collection we’ll give attention to ingesting streaming knowledge into the open knowledge lakehouse and Iceberg tables making it obtainable for additional processing that we’ll exhibit within the following blogs.

Answer Overview

Pre-requisites

The next parts in Cloudera Open Information Lakehouse on Personal Cloud must be put in and configured and airline knowledge units:

On this instance, we’re going to use NiFi as a part of CFM 2.1.6 to stream ingest knowledge units to Iceberg. Please notice, you too can leverage Flink and SQL Stream Builder in CSA 1.11 as nicely for streaming ingestion. We use NiFi to ingest an airport route knowledge set (JSON) and ship that knowledge to Kafka and Iceberg. We then use Hue/Impala to try the tables we created.

Please reference consumer documentation for set up and configuration of Cloudera Information Platform Personal Cloud Base 7.1.9 and Cloudera Stream Administration 2.1.6.

Observe the steps under for utilizing NiFi to stream ingest knowledge into Iceberg tables:

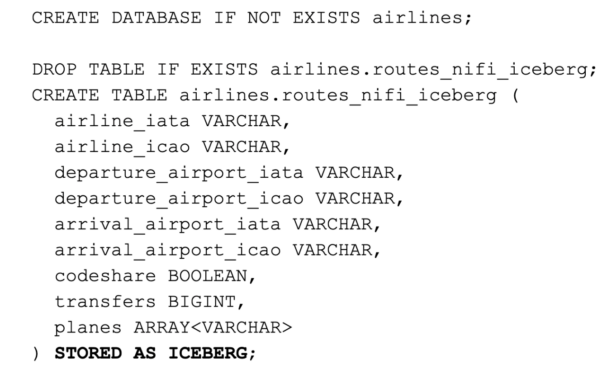

1- Create the routes Iceberg desk for NiFi ingestion in Hue/Impala execute the next DDL:

2- Obtain a pre-built stream definition file discovered right here:

https://github.com/jingalls1217/airways/blob/major/Datapercent20Flow/NiFiDemo.json

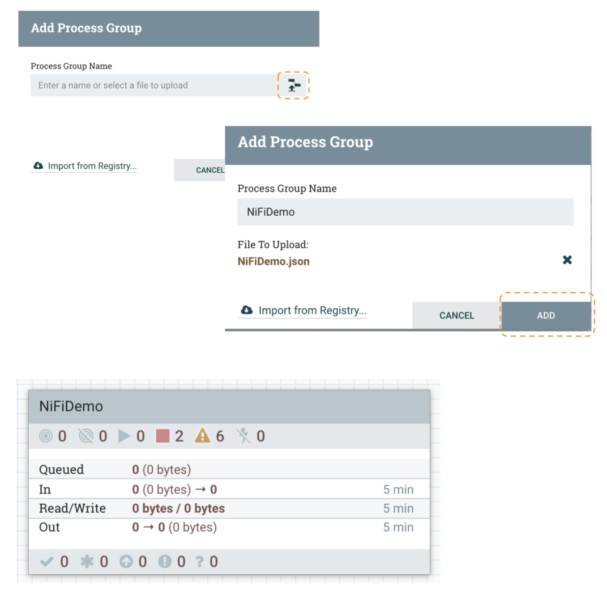

3-Create a brand new course of group in NiFi and add the stream definition file downloaded in step 2. First click on the Browse button, choose the NiFiDemo.json file and click on the Add button.

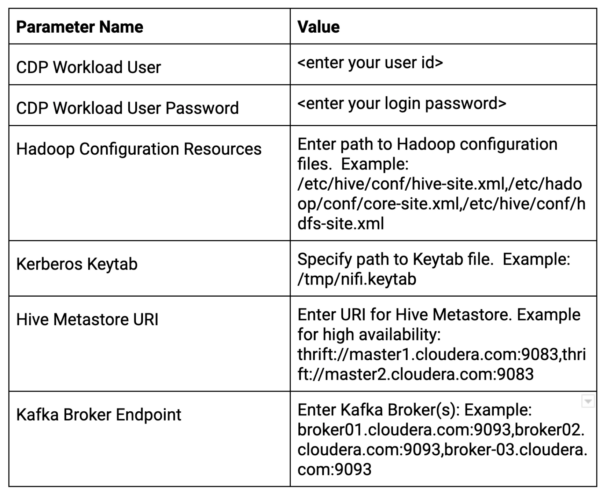

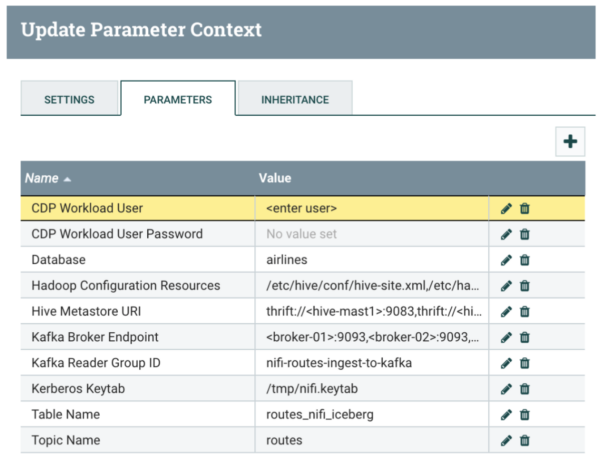

4- Replace parameters as proven in desk under:

5- Click on into the NiFiDemo course of group:

-

- Proper click on on the NiFi canvas, go to Configuration and allow the Controller Providers.

- Open every Course of Group and proper click on on the canvas, go to Configuration and Allow any extra Controller Providers not but enabled.

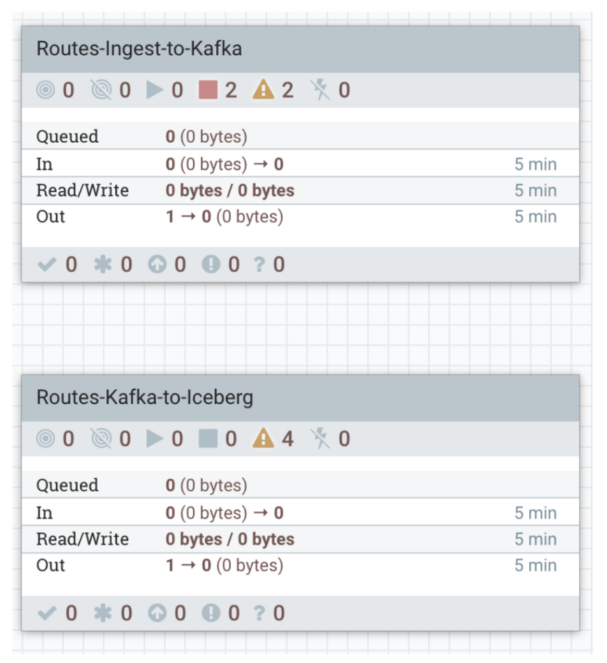

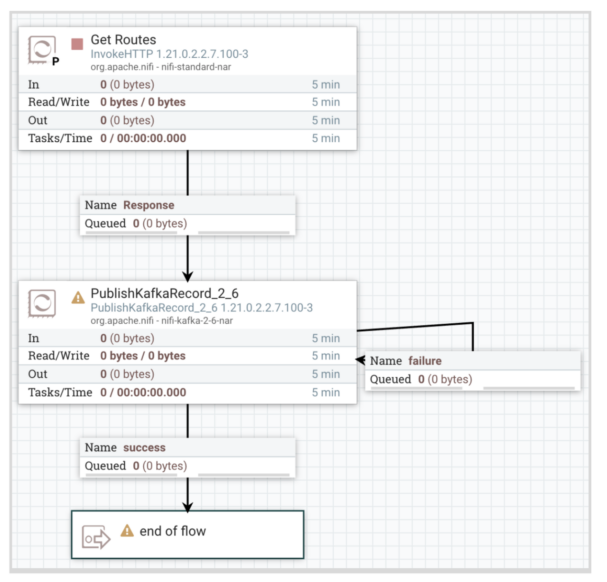

6- Begin the Routes ingest to Kafka stream and monitor success/failure queues:

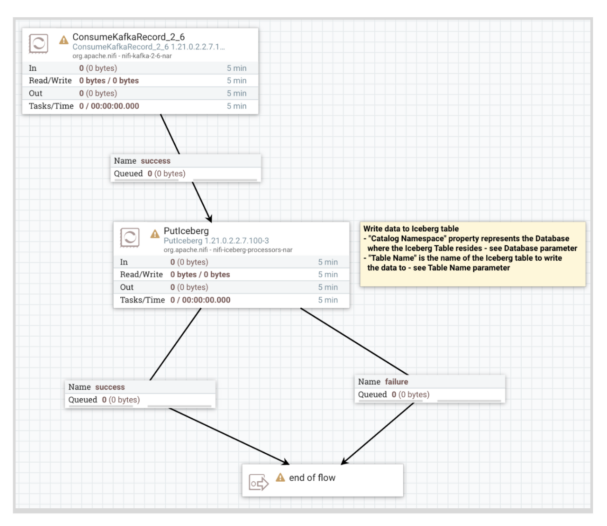

7- Begin the Routes Kafka to Iceberg stream and monitor success/failure queues:

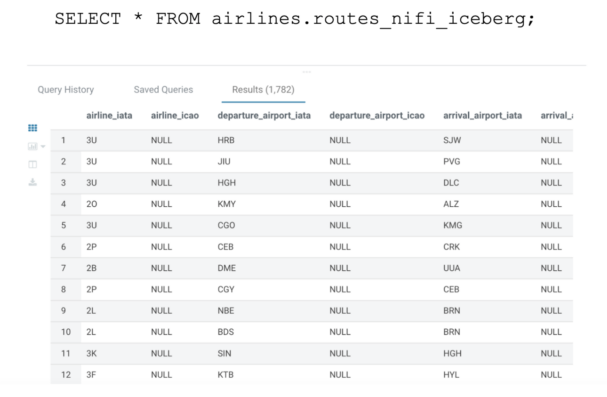

8- Examine the Routes Iceberg desk in Hue/Impala to see the info that has been loaded:

SELECT * FROM airways.routes_nifi_iceberg;

Conclusion

On this first weblog, we confirmed how you can use Cloudera Stream Administration (NiFi) to stream ingest knowledge on to the Iceberg desk with none coding. Keep tuned for half two, Information Processing with Apache Spark.

To construct an Open Information Lakehouse in your non-public cloud, obtain Cloudera Information Platform Personal Cloud Base 7.1.9 and observe our Getting Began weblog collection.

And since we provide the very same expertise in the private and non-private cloud you too can be a part of one in every of our Two hour hands-on-lab workshops to expertise the open knowledge lakehouse within the public cloud or join a free trial. In case you are curious about chatting about Cloudera Open Information Lakehouse, contact your account workforce. As at all times, we welcome your suggestions within the feedback part under.

[ad_2]