![[Big Book of MLOps Updated for Generative AI] [Big Book of MLOps Updated for Generative AI]](https://geeks-news.com/wp-content/uploads/https://www.databricks.com/static/og-databricks-58419d0d868b05ddb057830066961ebe.png)

[ad_1]

Final yr, we revealed the Huge E-book of MLOps, outlining guiding rules, design concerns, and reference architectures for Machine Studying Operations (MLOps). Since then, Databricks has added key options simplifying MLOps, and Generative AI has introduced new necessities to MLOps platforms and processes. We’re excited to announce a brand new model of the Huge E-book of MLOps protecting these product updates and Generative AI necessities.

This weblog submit highlights key updates within the eBook, which may be downloaded right here. We offer updates on governance, serving, and monitoring and focus on the accompanying design choices to make. We mirror these updates in improved reference architectures. We additionally embody a brand new part on LLMOps (MLOps for Massive Language Fashions), the place we focus on implications on MLOps, key parts of LLM-powered purposes, and LLM-specific reference architectures.

This weblog submit and eBook shall be helpful to ML Engineers, ML Architects, and different roles seeking to perceive the newest in MLOps and the affect of Generative AI on MLOps.

Huge E-book v1 recap

When you have not learn the unique Huge E-book of MLOps, this part provides a quick recap. The identical motivations, guiding rules, semantics, and deployment patterns type the idea of our up to date MLOps greatest practices.

Why ought to I care about MLOps?

We hold our definition of MLOps as a set of processes and automation to handle knowledge, code and fashions to fulfill the 2 objectives of steady efficiency and long-term effectivity in ML methods.

MLOps = DataOps + DevOps + ModelOps

In our expertise working with prospects like CareSource and Walgreens, implementing MLOps architectures accelerates the time to manufacturing for ML-powered purposes, reduces the chance of poor efficiency and non-compliance, and reduces long-term upkeep burdens on Knowledge Science and ML groups.

Guiding rules

Our guiding rules stay the identical:

- Take a data-centric method to machine studying.

- All the time hold your enterprise objectives in thoughts.

- Implement MLOps in a modular style.

- Course of ought to information automation.

The primary precept, taking a data-centric method, lies on the coronary heart of the updates within the eBook. As you learn beneath, you will note how our “Lakehouse AI” philosophy unifies knowledge and AI at each the governance and mannequin/pipeline layers.

Semantics of growth, staging and manufacturing

We construction MLOps by way of how ML property—code, knowledge, and fashions—are organized into phases from growth, to staging, and to manufacturing. These phases correspond to steadily stricter entry controls and stronger high quality ensures.

ML deployment patterns

We mentioned how code and/or fashions are deployed from growth in direction of manufacturing, and the tradeoffs in deploying code, fashions, or each. We present architectures for deploying code, however our steering stays largely the identical for deploying fashions.

For extra particulars on any of those subjects, please consult with the unique eBook.

What’s new?

On this part, we define the important thing product options that enhance our MLOps structure. For every of those, we spotlight the advantages they create and their affect on our end-to-end MLOps workflow.

Unity Catalog

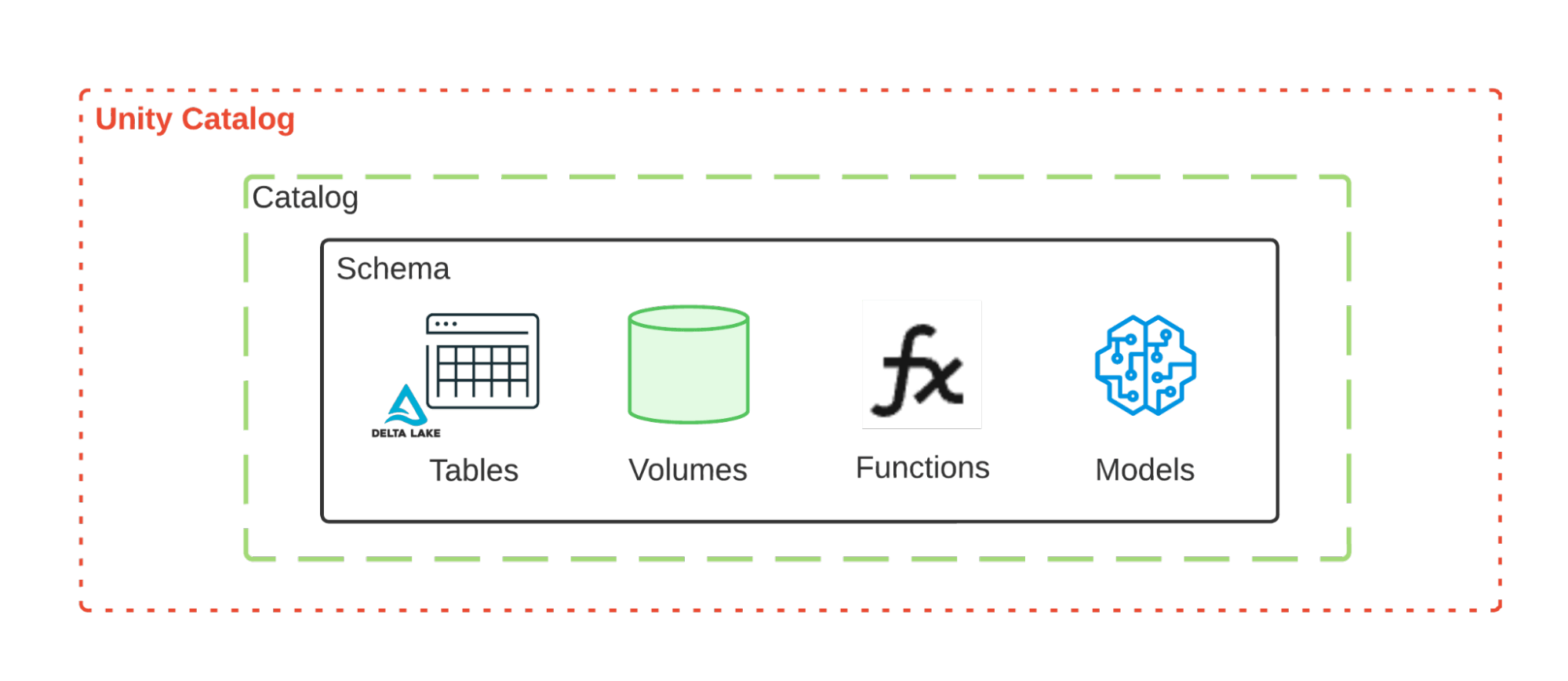

An information-centric AI platform should present unified governance for each knowledge and AI property on prime of the Lakehouse. Databricks Unity Catalog centralizes entry management, auditing, lineage, and knowledge discovery capabilities throughout Databricks workspaces.

Unity Catalog now contains MLflow Fashions and Function Engineering. This unification permits easier administration of AI initiatives which embody each knowledge and AI property. For ML groups, this implies extra environment friendly entry and scalable processes, particularly for lineage, discovery, and collaboration. For directors, this implies easier governance at challenge or workflow stage.

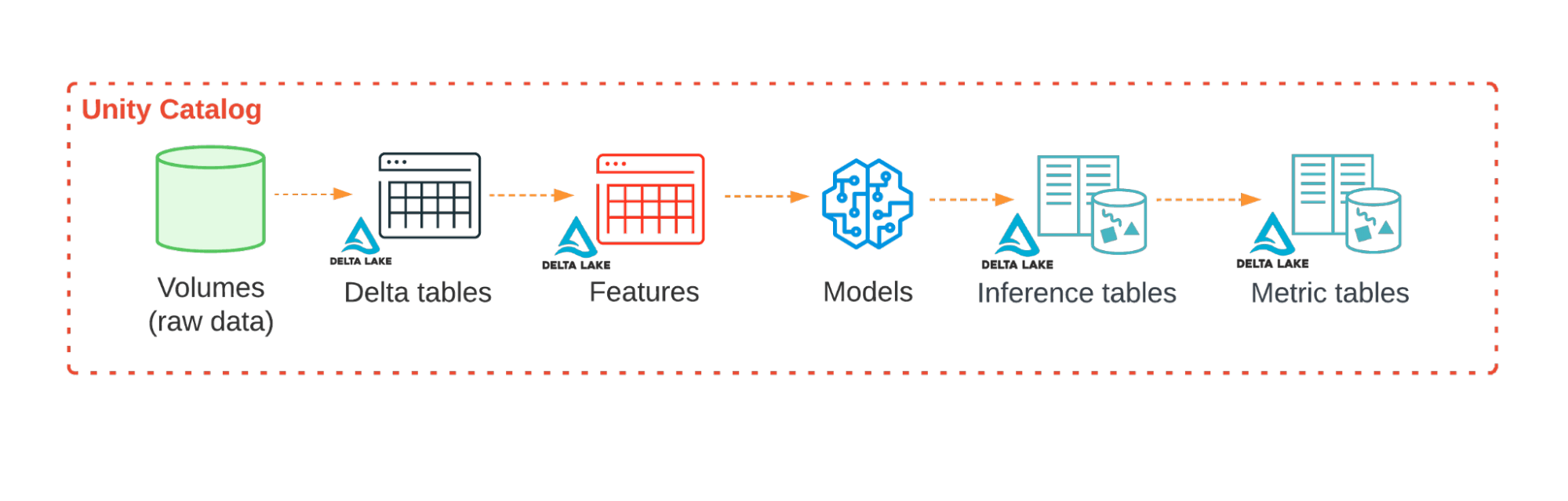

Belongings of an ML workflow, all managed by way of Unity Catalog, throughout a number of workspaces

Inside Unity Catalog, a given catalog accommodates schemas, which in flip might include tables, volumes, capabilities, fashions, and different property. Fashions can have a number of variations and may be tagged with aliases. Within the eBook, we offer really helpful group schemes for AI initiatives on the catalog and schema stage, however Unity Catalog has the flexibleness to be tailor-made to any group’s present practices.

Mannequin Serving

Databricks Mannequin Serving gives a production-ready, serverless answer to simplify real-time mannequin deployment, behind APIs to energy purposes and web sites. Mannequin Serving reduces operational prices, streamlines the ML lifecycle, and makes it simpler for Knowledge Science groups to give attention to the core process of integrating production-grade real-time ML into their options.

Within the eBook, we focus on two key design choice areas:

- Pre-deployment testing ensures good system efficiency and customarily contains deployment readiness checks and cargo testing.

- Actual-time mannequin deployment ensures good mannequin accuracy (or different ML efficiency metrics). We focus on methods together with A/B testing, gradual rollout, and shadow deployment.

We additionally focus on implementation particulars in Databricks, together with:

Lakehouse Monitoring

Databricks Lakehouse Monitoring is a data-centric monitoring answer to make sure that each knowledge and AI property are of top of the range and dependable. Constructed on prime of Unity Catalog, it gives the distinctive potential to implement each knowledge and mannequin monitoring, whereas sustaining lineage between the info and AI property of an MLOps answer. This unified and centralized method to monitoring simplifies the method of diagnosing errors, detecting high quality drift, and performing root trigger evaluation.

The eBook discusses implementation particulars in Databricks, together with:

MLOps Stacks and Databricks asset bundles

MLOps Stacks are up to date infrastructure-as-code options which assist to speed up the creation of MLOps architectures. This repository gives a customizable stack for beginning new ML initiatives on Databricks, instantiating pipelines for mannequin coaching, mannequin deployment, CI/CD, and others.

MLOps Stacks are constructed on prime of Databricks asset bundles, which outline infrastructure-as-code for knowledge, analytics, and ML. Databricks asset bundles will let you validate, deploy, and run Databricks workflows akin to Databricks jobs and Delta Reside Tables, and to handle ML property akin to MLflow fashions and experiments.

Reference architectures

The up to date eBook gives a number of reference architectures:

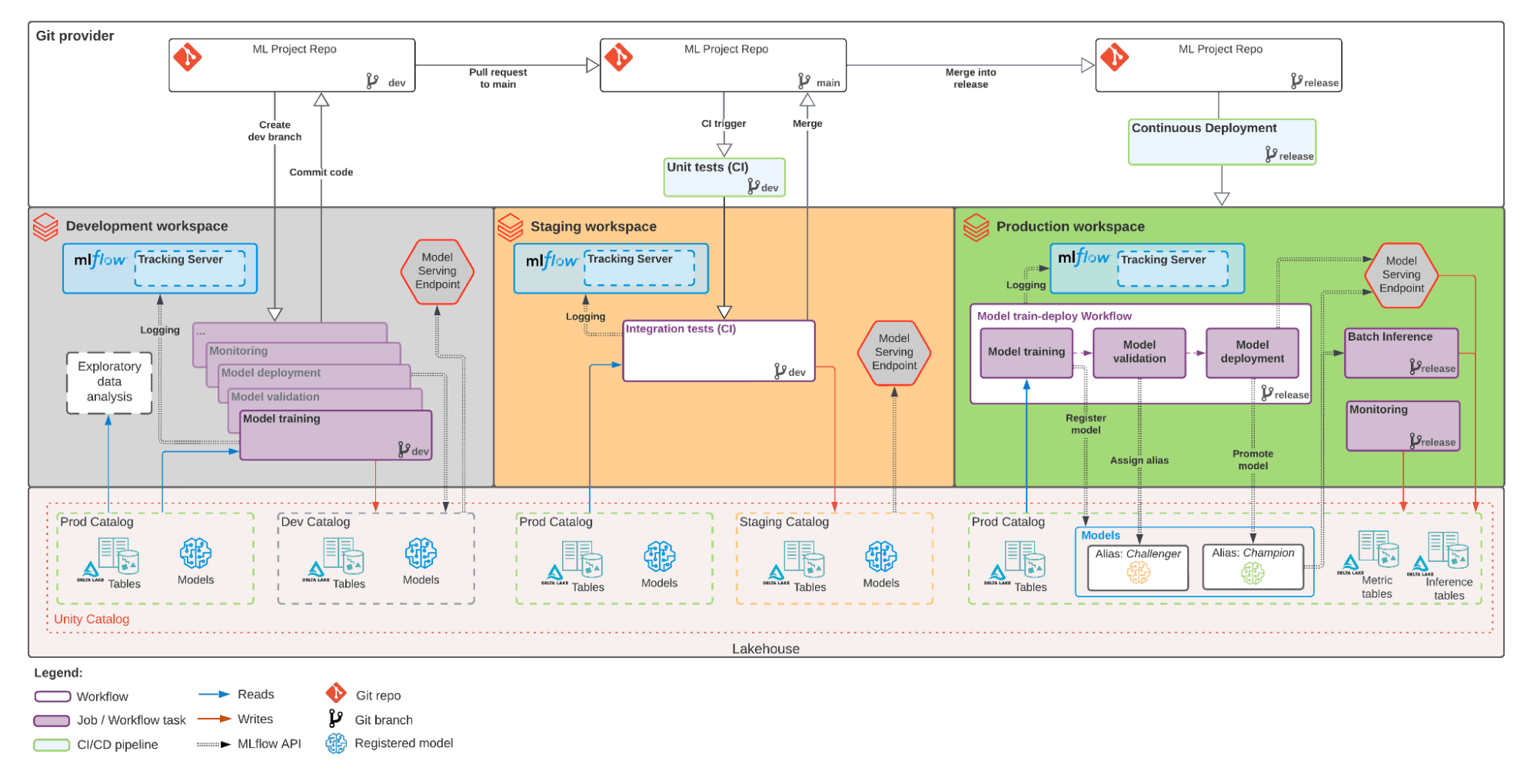

- Multi-environment view: This high-level view reveals how the event, staging, and manufacturing environments are tied collectively and work together.

- Improvement: This diagram zooms in on the event technique of ML pipelines.

- Staging: This diagram explains the unit checks and integration checks for ML pipelines.

- Manufacturing: This diagram particulars the goal state, exhibiting how the varied ML pipelines work together.

Under, we offer a multi-environment view. A lot of the structure stays the identical, however it’s now even simpler to implement with the newest updates from Databricks.

- High-to-bottom: The three layers present code in Git (prime) vs. workspaces (center) vs. Lakehouse property in Unity Catalog (backside).

- Left-to-right: The three phases are proven in three completely different workspaces; that isn’t a strict requirement however is a standard approach to separate phases from growth to manufacturing. The identical set of ML pipelines and providers are utilized in every stage, initially developed (left) earlier than being examined in staging (center) and at last deployed to manufacturing (proper).

The principle architectural replace is that each knowledge and ML property are managed as Lakehouse property within the Unity Catalog. Be aware that the massive enhancements to Mannequin Serving and Lakehouse Monitoring haven’t modified the structure, however make it easier to implement.

LLMOps

We finish the up to date eBook with a brand new part on LLMOps, or MLOps for Massive Language Fashions (LLMs). We communicate by way of “LLMs,” however many greatest practices translate to different Generative AI fashions as properly. We first focus on main modifications launched by LLMs after which present detailed greatest practices round key parts of LLM-powered purposes. The eBook additionally gives reference architectures for widespread Retrieval-Augmented Technology (RAG) purposes.

What modifications with LLMs?

The desk beneath is an abbreviated model of the eBook desk, which lists key properties of LLMs and their implications for MLOps platforms and practices.

| Key properties of LLMs | Implications for MLOps |

|---|---|

Implications for MLOps

|

Improvement course of: Initiatives usually develop incrementally, ranging from present, third-party or open supply fashions and ending with customized fashions (fine-tuned or totally educated on curated knowledge). |

| Many LLMs take normal queries and directions as enter. These queries can include fastidiously engineered “prompts” to elicit the specified responses. |

Improvement course of: Immediate engineering is a brand new vital a part of creating many AI purposes. Packaging ML artifacts: LLM “fashions” could also be various, together with API calls, immediate templates, chains, and extra. |

| Many LLMs may be given prompts with examples or context. | Serving infrastructure: When augmenting LLM queries with context, it’s useful to make use of instruments akin to vector databases to seek for related context. |

| Proprietary and OSS fashions can be utilized by way of paid APIs. | API governance: You will need to have a centralized system for API governance of fee limits, permissions, quota allocation, and price attribution. |

| LLMs are very massive deep studying fashions, usually starting from gigabytes to a whole bunch of gigabytes. |

Serving infrastructure: GPUs and quick storage are sometimes important. Price/efficiency trade-offs: Specialised methods for decreasing mannequin dimension and computation have turn into extra vital. |

| LLMs are arduous to judge by way of conventional ML metrics since there’s usually no single “proper” reply. | Human suggestions: This suggestions needs to be included immediately into the MLOps course of, together with testing, monitoring, and capturing to be used in future fine-tuning. |

Key parts of LLM-powered purposes

The eBook features a part for every matter beneath, with detailed explanations and hyperlinks to assets.

- Immediate engineering: Although many prompts are particular to particular person LLM fashions, we give some ideas which apply extra typically.

- Leveraging your personal knowledge: We offer a desk and dialogue of the continuum from easy (and quick) to advanced (and highly effective) for utilizing your knowledge to achieve a aggressive edge with LLMs. This ranges from immediate engineering, to retrieval augmented era (RAG), to fine-tuning, to full pre-training.

- Retrieval augmented era (RAG): We focus on this most typical kind of LLM utility, together with its advantages and the everyday workflow.

- Vector database: We focus on vector indexes vs. vector libraries vs. vector databases, particularly for RAG workflows.

- High-quality-tuning LLMs: We focus on variants of fine-tuning, when to make use of it, and state-of-the-art methods for scalable and resource-efficient fine-tuning.

- Pre-training: We focus on when to go for full-on pre-training and reference state-of-the artwork methods for dealing with challenges. We additionally strongly encourage using MosaicML Coaching, which mechanically handles most of the complexities of scale.

- Third-party APIs vs. self-hosted fashions: We focus on the tradeoffs round knowledge safety and privateness, predictable and steady habits, and vendor lock-in.

- Mannequin analysis: We contact on the challenges on this nascent subject and focus on benchmarks, utilizing LLMs as evaluators, and human analysis.

- Packaging fashions or pipelines for deployment: With LLM purposes utilizing something from API calls to immediate templates to advanced chains, we offer recommendation on utilizing MLflow Fashions to standardize packaging for deployment.

- LLM Inference: We offer ideas round real-time inference and batch inference, together with utilizing massive fashions.

- Managing value/efficiency trade-offs: With LLMs being massive fashions, we dedicate this part to decreasing prices and enhancing efficiency, particularly for inference.

Get began updating your MLOps structure

This weblog is merely an summary of the reasons, greatest practices, and architectural steering within the full eBook. To study extra and to get began on updating your MLOps platform and practices, we advocate that you just:

- Learn the up to date Huge E-book of MLOps. All through the eBook, we offer hyperlinks to assets for particulars and for studying extra about particular subjects.

- Make amends for the Knowledge+AI Summit 2023 talks on MLOps, together with:

- Learn and watch about success tales:

- Communicate together with your Databricks account staff, who can information you thru a dialogue of your necessities, assist to adapt this reference structure to your initiatives, and have interaction extra assets as wanted for coaching and implementation.

[ad_2]