相关搜索

SONE-501 AV历史,纯白皮肤,美肌,4K相机拍摄还有AV历史感!身材姣好的美乳正妹「宇野美咲」

日本有码 - 2025-01-14

SONE-515 纯粹的状态和准备、夏日的天空、性恋物和极度开放3系列

日本有码 - 2025-01-14

SONE-512 潮吹是一件很微妙的事情! !天空的大痉挛的大痉挛是性玛克戈吉

日本有码 - 2025-01-14

SONE-492 云能仁金松智珉的一生,人生之初充满禁欲,自我约束·すっぴん·プライベートを公开Kakurして

日本有码 - 2025-01-14

SONE-495元系统台场地球波Aidara Isaken第二章 Ariel Ishida Karin

日本有码 - 2025-01-14

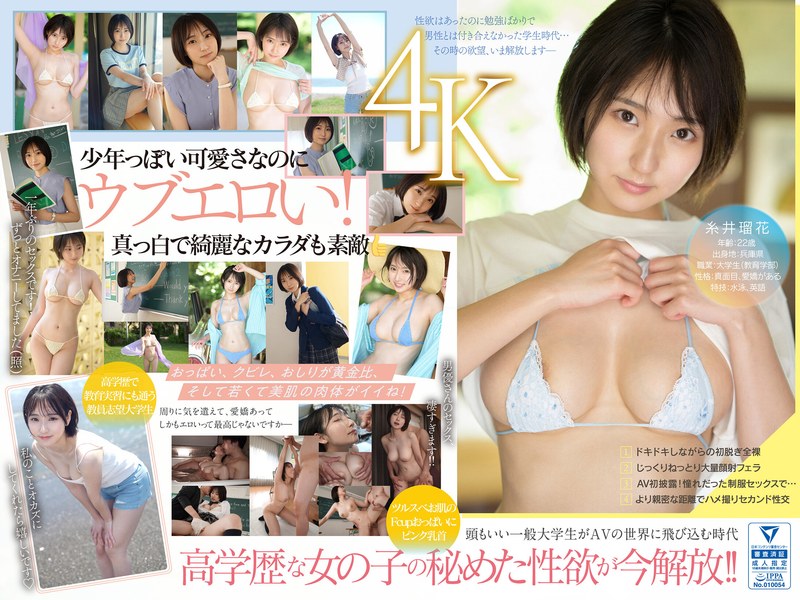

SONE-568 新一号STYLE 时代贤淑少女!如果教育之美是实习生!糸井瑠香AB室

日本有码 - 2025-01-14

SONE-496 跳高运动员一筋打算在15年后退役!跃入舞蹈世界的美,就是人间的美。

日本有码 - 2025-01-14

SONE-519 新人 NO.1STYLE 気品ある犊け大人エロス ビューティードバイザー 伊本しおり (26)

日本有码 - 2025-01-14

SORA-576 隠れマゾ见つけた。房屋遭抢劫扣押,屋后被盗。

日本有码 - 2025-01-14

SORA-572 隠れマゾ见つけた。叫声

日本有码 - 2025-01-14

SORA-575 世界直人与令和诺拉的私仇与对外制裁

日本有码 - 2025-01-14

SONE-560 S1 PRECIOUS GIRLS 2024 オールスター 24人合集 ハーremuairan-cd2

日本有码 - 2025-01-14

SONE-494 「あなたの邻居にいたかもしれない」纯白おっぱい和敏感的乳房Jkappu超能力200点furukosu

日本有码 - 2025-01-14

SORA-577 マゾHumanoid にfall ちた秋広さん家のママ セックsuresuでdanna に女として认出

日本有码 - 2025-01-14

SONE-560 S1 PRECIOUS GIRLS 2024 オールスター合集 24 首 ハーremuairan-cd1

日本有码 - 2025-01-14

SOAN-110 肛交カウンセringu学校帰りにタコ部屋娐ラマキ无色酔による两孔必须插在一块,编号験号017

日本有码 - 2025-01-13

SOAV-119 已婚女人的浮动心

日本有码 - 2025-01-13

SMJB-005 被盗的云南任登记妻子的照片,顶级女孩。 W灆ク&W亲密接触嫫き、男性身心をくすぐ

日本有码 - 2025-01-13

SMKCX-011 完全昏迷-4人- ≪极楽≫チariーディング#じょうきゅうせい地上部#女一愿之学校#商気ツインテ

日本有码 - 2025-01-13

SONE-435 弟子的仆人疯狂,导师的导师本质邪恶。

日本有码 - 2025-01-13

SONE-449 美丽可爱的女孩 - Kirara Kazuya [美脸·美乳·美乳·美腿]でヌキまくる极紧密メンズエsute

日本有码 - 2025-01-13

烟雾-008腿 テクでも抜き

日本有码 - 2025-01-13

SONE-453 妻子的巨乳和NTR的急速女友 订婚合同是给最爱她的女孩的。

日本有码 - 2025-01-13

SONE-444是一个穿着制服的柔弱女孩。

日本有码 - 2025-01-13

SONE-155 新俱乐部的新成员、中年组长和2人宾馆。

日本有码 - 2025-01-13

SONE-421 刺激220倍!挤压6690次! Ekisio 4400cc!大学生「优秀BODY」Eruko觉醒

日本有码 - 2025-01-13

SONE-452いつも见下ろしてたBoss女身高148cm,のミニカワ...交叉られ,看下され,胸部负责骑乘姿势でもヌ

日本有码 - 2025-01-13

SONE-460 一家人想要一个妹妹和一个弟弟。兴きこもった治疗哥哥的性欲が日式なヤン

日本有码 - 2025-01-13

SONE-456 【うんぱい】を生み出した影の Dominator に逆らえないprivateは 今天もグズ 男士专用 マゾペtto 肉玩具

日本有码 - 2025-01-13

SONE-461 可爱がってる新男成员が结婚报告してきてジェラシー逃跑!好的,好的,悬挂,顶部位置,暨,暨

日本有码 - 2025-01-13